Surrogate DDS to DDL Modernization - Example

One of the issues with legacy RPG code is having to re-compile all programs that use a physical file, if it changes.

If your team is moving towards Agile development then Surrogate DDS to DDL database modernization is a good first step. You will be able to convert a file at a time to use the more advanced SQL capabilities. Each file can then be tested and promoted into UAT and/or production individually before you even think about further development.

The advantages are that you will not be required to re-compile all the RPG programs that reference a particular file, they will still run as they are.

In the surrogate method, an SQL table with a new name is created that contains all of the existing columns from the original physical file plus any new columns if you need them. A DDS logical file is created with the same name and record format as the old physical file and the logical file is created over the new SQL table. Additionally, any existing DDS logical files will remain but also point to the new SQL table. Additional SQL indexes and views, with new names can also be added.

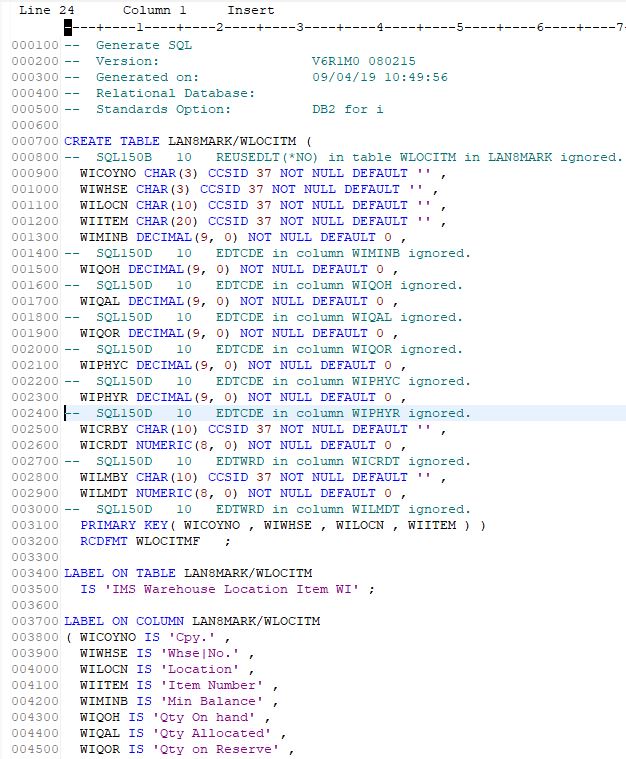

Step 1 - Create the DDL from an existing file.

To do this you will need a utility that creates DDL from a DDS defined file. I used the API provided by IBM (QSQLGNDDL) and a great article written by Simon Hutchinson on RPGPGM.COM which explains this. I used the fixed format version as my old dev box is still at V6R1, it will be retired very soon.

Here is what it generated.

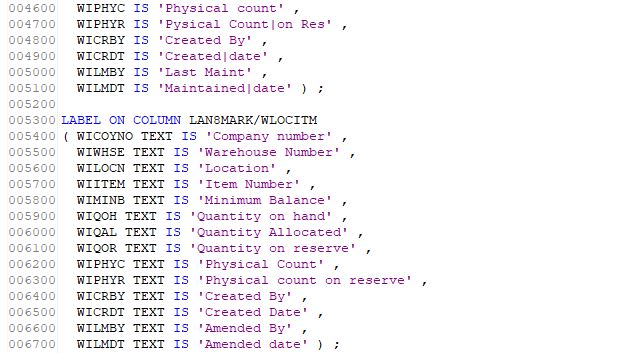

Step 2 - Modify the Generated Source

I added SQ to the end of the table name obviously it cannot be the same name as the old physical, I also dropped the library qualifier and used my Change Management Product of choice MDCMS, to create the table. MDCMS works with all SQL type codes to build/rebuild the relevant SQL objects. You should of course use change control if you have adopted it. Just be sure you modify the library names in the generated SQL if you don't use change control software.

To generate the SQL manually use RUNSQLSTMT from the IBM i command line.

So here is my work list with the new table, the old physical converted to a logical and an existing logical which I will point to the new SQL Table.

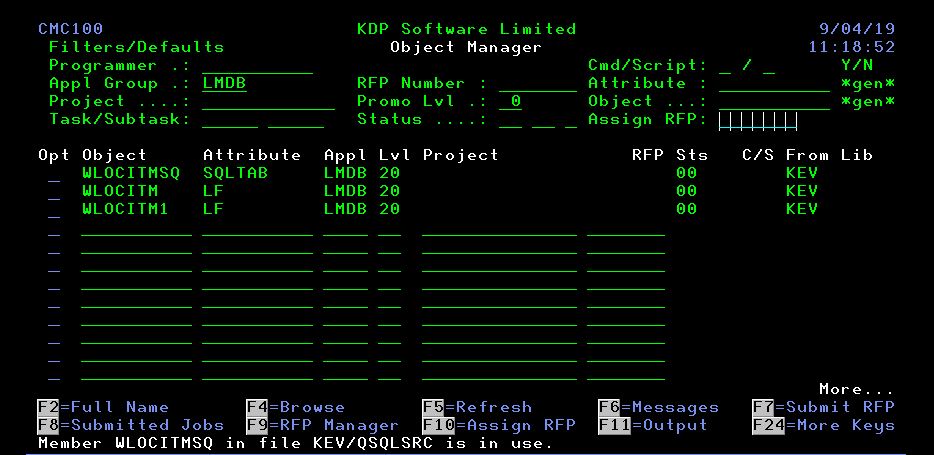

The change control software I am using will also copy any data in the original file into our new DDL defined file before replacing it, this is done by specifying the data origin option which is of course is an MDCMS feature. You will need to make sure you are able to copy the data when you convert the original physical to a logical. Again this is one of the benefits of automating the changes with a change control tool.

Make sure you refer to your CM's system manual for the equivalent settings, or if you are doing it manually - Good Luck !!

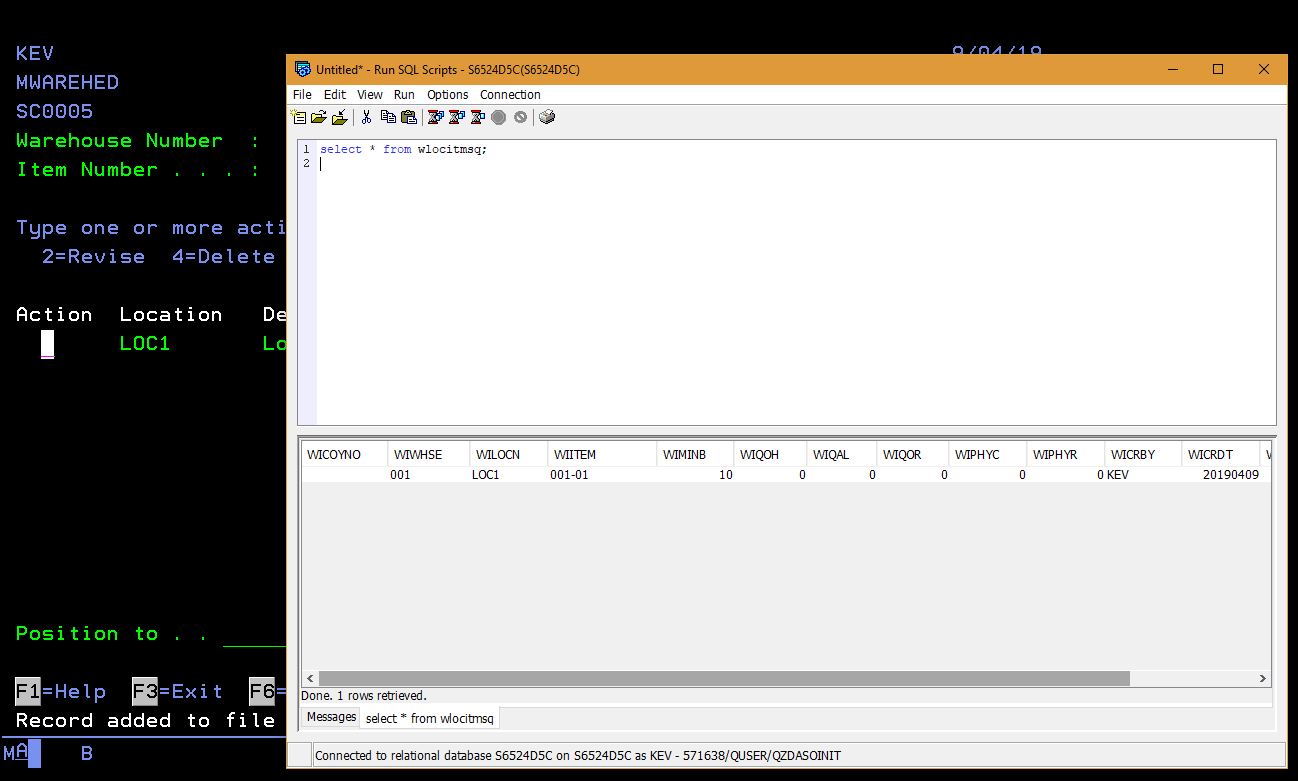

My old RPG program MWAREHED is now populating the new SQL table without the need to recompile it.

The down side is that you are restricted to the original short field names but at least moving forward you can start separating the database from the old RPG application and building a database that is defined in SQL, with all the benefits that brings.

Of course the ideal route to database modernization is to re-write the database in it's entirety using only SQL, but we don't live in an ideal world, sometimes it is too expensive to do this, if you just want to add, say a date field to your physical file.

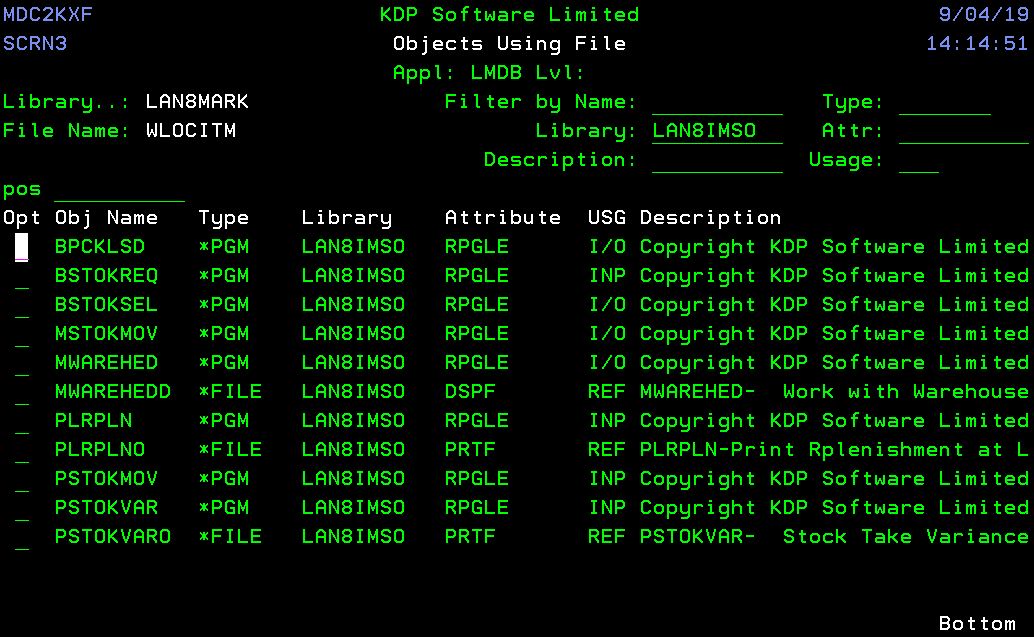

At least this way you can add the date field to the newly defined SQL definition and only change the programs that require the use of the additional field. Imagine changing a master file that is referenced throughout your application. In the example above I would have had to check out and re-compile eleven programs that referenced these files. Instead I changed two file definitions and created one SQL member from an existing physical file.

Here is my XREF list.

I am able to change the database in less than an hour, compile the three programs and promote them forward to test. After testing that all my programs still work with the new SQL table, I can promote these tables into production. If anything did go wrong I can immediately back them out.

Thinking about Agile, modernizing small parts at a time allows me to compile test and promote all objects in the space of a short time as opposed to the waterfall method where I compile/promote three files and the eleven programs, of course with bigger applications this scales up and the amount of work increases.

Change control is a critical factor in this, in that all changes are recorded, the move of objects/source is done automatically and any failures will result in the original objects being restored.